|

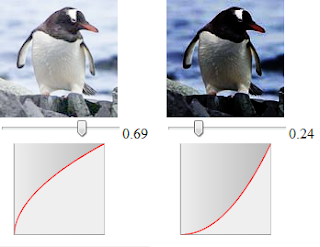

| This is a screenshot of the user interface for the procedural texture app. |

The interface is simple. There's a text box where you can type some code (see the screenshot above). Whatever you type there is executed once for every pixel of the 2D canvas. The following names are exposed to your code:

x -- the x coordinate of the current pixel

y -- the y coordinate of the current pixel

w -- the width of the canvas, in pixels

h -- the height of the canvas, in pixels

r -- the red value of the current pixel (0..255)

g -- the green value of the current pixel (0..255)

b -- the blue value of the current pixel (0..255)

PerlinNoise.noise( u,v,w ) -- Perlin's 3D noise function, scaled here to return values in the range 0..1

Offhand, you wouldn't think a loop that calls a callback for every pixel of a canvas image would be fast, but in reality the procedural shader can "call out" at a rate of over a million pixels per second. If you make calls to the Perlin noise() function in your loop, that'll slow you down to ~120K pixels per second. But that's still pretty good.
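The per-pixel loop is easy to try outside the browser. What follows is a minimal sketch, not the page's actual code: it runs a shader string against a plain Uint8ClampedArray (laid out as RGBA bytes, like canvas image data) instead of a real canvas, and it builds the callback with new Function rather than eval. The names makeShader and shadeBuffer are made up for this illustration.

```javascript
// Hypothetical helper: wrap user code in a per-pixel function.
// Same convention as the page: r, g, b come in, [r, g, b] goes out.
function makeShader(code) {
  return new Function("pixel", "x", "y", "w", "h",
    "var r = pixel[0], g = pixel[1], b = pixel[2];\n" +
    code + "\nreturn [r, g, b];");
}

// Hypothetical helper: run the shader over an RGBA buffer of w*h pixels.
function shadeBuffer(data, w, h, shader) {
  for (var y = 0; y < h; y++) {
    for (var x = 0; x < w; x++) {
      var idx = (x + y * w) * 4;   // 4 bytes per pixel: R, G, B, A
      var out = shader([data[idx], data[idx + 1], data[idx + 2]], x, y, w, h);
      data[idx] = out[0];
      data[idx + 1] = out[1];
      data[idx + 2] = out[2];      // alpha (idx + 3) is left untouched
    }
  }
  return data;
}

// Usage: a 2x2 opaque white buffer shaded with a simple gradient.
var buffer = new Uint8ClampedArray(2 * 2 * 4).fill(255);
shadeBuffer(buffer, 2, 2, makeShader("r = 255 * x; g = 255 * y; b = 0;"));
```

Timing a loop like this over a larger buffer is one way to check the pixels-per-second figures on your own machine.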

The versatility of the noise() function is truly amazing. The key to using it effectively is to understand how to scale it. By appropriately scaling the x and y parameters, you can stretch the noise space to any degree you want. You can achieve very colorful results, of course, by applying the result in creative ways to the r, g, and b channels. For example:

This texture was achieved with the following shader code:

n = PerlinNoise.noise(x/45,y/120, .89);

n = Math.cos( n * 85);

r = Math.round(n * 255);

b = 255 - r;

g = r - 255 ;

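In this instance, the noise is scaled differently in x and y and then "reflected back on itself" (so to speak) using the cosine function. The color channels are then fiddled in such a way that whatever isn't red is blue: the canvas clamps out-of-range channel values to 0..255, so green (r - 255, which is never positive) always ends up as 0.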
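To make the channel arithmetic concrete, here is a sketch that pushes a few cosine values through the same three formulas. It assumes the pixel store behaves like a Uint8ClampedArray, which is what current browsers use for canvas image data; channelsFromCosine is a name invented for the example.

```javascript
// Hypothetical helper: apply the channel formulas from the shader above
// to a cosine value c in -1..1 and store them the way a canvas would.
function channelsFromCosine(c) {
  var r = Math.round(c * 255);   // -255..255
  var b = 255 - r;               // 0..510
  var g = r - 255;               // -510..0
  // Storing into a clamped array saturates each value to 0..255.
  return Array.from(new Uint8ClampedArray([r, g, b]));
}

var fullyRed = channelsFromCosine(1);    // [255, 0, 0]
var fullyBlue = channelsFromCosine(-1);  // [0, 0, 255]
var mixed = channelsFromCosine(0.5);     // [128, 0, 127]
```

Any cosine value at or below zero gives pure blue, which is why the blue regions in the texture are flat while the red ones are shaded.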

By normalizing the texture space in various ways, you can end up with surprising effects. For example, consider:

centerx = w/2; centery = h/2;

dx = x - centerx; dy = y - centery;

dist = (dx*dx + dy*dy)/6000;

n = PerlinNoise.noise(x/5,y/5,.18);

r = 255 - dist*Math.round(255*n);

g = r - 255; b = 0;

In this case, we calculate a pseudo-distance from the center of the image (the squared distance dx*dx + dy*dy, divided by 6000) and fiddle with the colors to make the result red on a black background. The parameters to noise() have been scaled to give a relatively fine-grained noise.
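To get a feel for the numbers, here is the falloff term worked out for a 300x300 canvas (the size used in the page). This is only the arithmetic from the shader pulled into functions; the names falloff and red are illustrative choices, and the noise value n is passed in by hand.

```javascript
// Squared distance from the canvas center, divided by 6000 as in the shader.
function falloff(x, y, w, h) {
  var dx = x - w / 2, dy = y - h / 2;
  return (dx * dx + dy * dy) / 6000;
}

// Red channel for a given noise value n (0..1), before the canvas clamps it.
function red(x, y, w, h, n) {
  return 255 - falloff(x, y, w, h) * Math.round(255 * n);
}

var atCenter = falloff(150, 150, 300, 300);     // 0
var atCorner = falloff(0, 0, 300, 300);         // 7.5
var redAtCenter = red(150, 150, 300, 300, 0.5); // 255: full red
var redAtCorner = red(0, 0, 300, 300, 0.5);     // -705: clamps to black
```

The falloff reaches 1 at a radius of about 77 pixels (the square root of 6000), so a larger divisor gives a bigger red blob and a smaller one shrinks it.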

If you download the code for the procedural-shader page (given further below), you can play with this "texture" yourself. Try substituting larger or smaller values for the scaling numbers to see what happens.

A dramatically different effect can be obtained by normalizing x and y and applying trig functions creatively:

x /= w; y /= h; sizex = 1.5; sizey=10;

n=PerlinNoise.noise(sizex*x,sizey*y,.4);

x = (1+Math.cos(n+2*Math.PI*x-.5));

x = Math.sqrt(x); y *= y;

r= 255-x*255; g=255-n*x*255; b=y*255;

Again, if you decide to download the code yourself, try playing with the various sizing parameters to see what the effect on the image is. That's the best way to get a feel for what's going on.

As you know if you've played with procedural textures before, you get a lot of mileage by first normalizing x and y (to keep them in the range 0..1) and then applying functions whose output is also normalized to 0..1. (Sine and cosine are easily normalized to that range: add 1 and divide by 2.) Once a number is in the range 0..1, it can be squared (or square-rooted) and still fall in the range 0..1. When you're ready to apply the number to a color channel, multiply by 255 so that the result is in the range 0..255.
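A small sketch of that workflow, with unitSin and toChannel as hypothetical helper names (the presets simply write these expressions inline):

```javascript
// Map sine output from -1..1 into 0..1.
function unitSin(t) { return (1 + Math.sin(t)) / 2; }

// Convert a 0..1 value to a 0..255 channel value.
function toChannel(v) { return Math.round(255 * v); }

var v = unitSin(1.3);        // somewhere in 0..1
var squared = v * v;         // still in 0..1, pushed toward 0
var rooted = Math.sqrt(v);   // still in 0..1, pushed toward 1
var channel = toChannel(rooted);
```

Squaring darkens the midtones and taking the square root brightens them, which is why the presets use both to shape contrast.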

I've included a number of "presets" in the procedural-texture page (including code for the foregoing images). Here's another one that I like:

x/=w;y/=h;

size = 20;

n = PerlinNoise.noise(size*x,size*y,.9);

b = 255 - 255*(1+Math.sin(n+6.3*x))/2;

g = 255 - 255*(1+Math.cos(n+6.3*x))/2;

r = 255 - 255*(1-Math.sin(n+6.3*x))/2;

I call this the "noisy rainbow." Without the noise term, it simply paints a rainbow across the image space, but a little added noise gives the effect shown here.
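One way to see why: the noise value n is added inside the sine and cosine, so it acts as a small phase shift on three out-of-step color waves. Here is a sketch of just the channel math, with rainbow as a hypothetical function name and n passed in directly rather than computed by PerlinNoise.noise():

```javascript
// Channel formulas from the preset, for normalized x (0..1) and noise n.
function rainbow(x, n) {
  var t = n + 6.3 * x;   // 6.3 is roughly 2*PI: one full cycle across the image
  return {
    r: 255 - 255 * (1 - Math.sin(t)) / 2,
    g: 255 - 255 * (1 + Math.cos(t)) / 2,
    b: 255 - 255 * (1 + Math.sin(t)) / 2
  };
}

var leftEdge = rainbow(0, 0);      // r = 127.5, g = 0, b = 127.5
var plain = rainbow(0.25, 0);      // no noise: color depends on x only
var shifted = rainbow(0.25, 0.5);  // same x, different color once n is nonzero
```

Because red and blue use opposite signs on the same sine, they always sum to 255, while green follows the cosine a quarter cycle out of step.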

The code includes a few more examples (that aren't shown here). I encourage you to download it and play with it. Simply copy and paste all of the code below into a text file and give it a name that ends in ".html". Then open it in Chrome, Firefox, or any canvas-capable browser.

The texture presets have been placed in a hidden div containing a bunch of span elements, and then at runtime the HTML dropdown menu is populated by loadHiddenText().

<html>

<head>

<script>

// A canvas demo by Kas Thomas.

// http://asserttrue.blogspot.com

// Use as you will, at your own risk.

context = null;

canvas = null;

window.onload = function(){

canvas = document.getElementById("myCanvas");

canvas.addEventListener('mousemove', handleMousemove, false);

context = canvas.getContext("2d");

loadHiddenText();

}

function loadHiddenText( ) {

var options = document.getElementsByTagName( "option" );

var spans = document.getElementsByTagName( "span" );

for (var i = 0; i < options.length; i++)

options[i].value = spans[i].innerHTML;

}

// should probably be called resetCanvas()

function clearImage( ) {

canvas.width = canvas.width;

}

function drawViaCallback( ) {

var w = canvas.width;

var h = canvas.height;

var canvasData = context.getImageData(0,0,w,h);

for (var idx, x = 0; x < w; x++) {

for (var y = 0; y < h; y++) {

// Index of the pixel in the array

idx = (x + y * w) * 4;

// The RGB values

var r = canvasData.data[idx + 0];

var g = canvasData.data[idx + 1];

var b = canvasData.data[idx + 2];

var pixel = callback( [r,g,b], x,y,w,h);

canvasData.data[idx + 0] = pixel[0];

canvasData.data[idx + 1] = pixel[1];

canvasData.data[idx + 2] = pixel[2];

}

}

context.putImageData( canvasData, 0,0 );

}

function fillCanvas( color ) {

context.fillStyle = color;

context.fillRect(0,0,canvas.width,canvas.height);

}

function doPixelLoop() {

var code = document.getElementById("code").value;

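// The newline before "return" keeps a trailing // comment in the user's code

// from commenting out the return statement.

var f = "callback = function( pixel,x,y,w,h )" +

" { var r=pixel[0];var g=pixel[1]; var b=pixel[2];" +

code + "\n return [r,g,b]; }";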

try {

eval(f);

fillCanvas( "#FFFFFF" );

drawViaCallback( );

}

catch(e) { alert("Error: " + e.toString()); }

}

function handleMousemove (ev) {

var x, y;

// Get the mouse position relative to the canvas element.

if (ev.layerX || ev.layerX == 0) { // Firefox

x = ev.layerX;

y = ev.layerY;

} else if (ev.offsetX || ev.offsetX == 0) { // Opera

x = ev.offsetX;

y = ev.offsetY;

}

document.getElementById("myCanvas").title = x + ", " + y;

}

// This is a port of Ken Perlin's Java code.

PerlinNoise = new function() {

this.noise = function(x, y, z) {

var p = new Array(512); // doubled permutation table (rebuilt on every call)

var permutation = [ 151,160,137,91,90,15,

131,13,201,95,96,53,194,233,7,225,140,36,103,30,69,142,8,99,37,240,21,10,23,

190, 6,148,247,120,234,75,0,26,197,62,94,252,219,203,117,35,11,32,57,177,33,

88,237,149,56,87,174,20,125,136,171,168, 68,175,74,165,71,134,139,48,27,166,

77,146,158,231,83,111,229,122,60,211,133,230,220,105,92,41,55,46,245,40,244,

102,143,54, 65,25,63,161, 1,216,80,73,209,76,132,187,208, 89,18,169,200,196,

135,130,116,188,159,86,164,100,109,198,173,186, 3,64,52,217,226,250,124,123,

5,202,38,147,118,126,255,82,85,212,207,206,59,227,47,16,58,17,182,189,28,42,

223,183,170,213,119,248,152, 2,44,154,163, 70,221,153,101,155,167, 43,172,9,

129,22,39,253, 19,98,108,110,79,113,224,232,178,185, 112,104,218,246,97,228,

251,34,242,193,238,210,144,12,191,179,162,241, 81,51,145,235,249,14,239,107,

49,192,214, 31,181,199,106,157,184, 84,204,176,115,121,50,45,127, 4,150,254,

138,236,205,93,222,114,67,29,24,72,243,141,128,195,78,66,215,61,156,180

];

for (var i=0; i < 256 ; i++)

p[256+i] = p[i] = permutation[i];

var X = Math.floor(x) & 255, // FIND UNIT CUBE THAT

Y = Math.floor(y) & 255, // CONTAINS POINT.

Z = Math.floor(z) & 255;

x -= Math.floor(x); // FIND RELATIVE X,Y,Z

y -= Math.floor(y); // OF POINT IN CUBE.

z -= Math.floor(z);

var u = fade(x), // COMPUTE FADE CURVES

v = fade(y), // FOR EACH OF X,Y,Z.

w = fade(z);

var A = p[X ]+Y, AA = p[A]+Z, AB = p[A+1]+Z, // HASH COORDINATES OF

B = p[X+1]+Y, BA = p[B]+Z, BB = p[B+1]+Z; // THE 8 CUBE CORNERS,

return scale(lerp(w, lerp(v, lerp(u, grad(p[AA ], x , y , z ), // AND ADD

grad(p[BA ], x-1, y , z )), // BLENDED

lerp(u, grad(p[AB ], x , y-1, z ), // RESULTS

grad(p[BB ], x-1, y-1, z ))),// FROM 8

lerp(v, lerp(u, grad(p[AA+1], x , y , z-1 ), // CORNERS

grad(p[BA+1], x-1, y , z-1 )), // OF CUBE

lerp(u, grad(p[AB+1], x , y-1, z-1 ),

grad(p[BB+1], x-1, y-1, z-1 )))));

}

function fade(t) { return t * t * t * (t * (t * 6 - 15) + 10); }

function lerp( t, a, b) { return a + t * (b - a); }

function grad(hash, x, y, z) {

var h = hash & 15; // CONVERT LO 4 BITS OF HASH CODE

var u = h<8 ? x : y, // INTO 12 GRADIENT DIRECTIONS.

v = h<4 ? y : h==12||h==14 ? x : z;

return ((h&1) == 0 ? u : -u) + ((h&2) == 0 ? v : -v);

}

function scale(n) { return (1 + n)/2; }

}

</script>

</head>

<body>

<canvas id="myCanvas" width="300" height="300">

</canvas><br/>

<input type="button" value=" Erase "

onclick="clearImage(); "/>

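<!-- Setting value as well keeps the presets working after the textarea has been edited by hand. -->

<select onchange=

"var t = document.getElementById('code'); t.innerHTML = this.value; t.value = t.defaultValue;">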

<option>Choose something, then click Execute</option>

<option>Basic Perlin Noise</option>

<option>Waterfall</option>

<option>Spherical Nebula</option>

<option>Green Fibre Bundle</option>

<option>Orange-Blue Marble</option>

<option>Blood Maze</option>

<option>Yellow Lightning</option>

<option>Downward Rainbow Wipe</option>

<option>Noisy Rainbow</option>

<option>Burning Cross</option>

</select>

<br/>

<textarea id="code" type="textarea" cols="40" rows="7">/* Enter code here. */</textarea>

<br/>

<input type="button" value=" Execute "

onclick="doPixelLoop();" />

<input type="button" value="Open as PNG"

onclick="window.open(canvas.toDataURL('image/png'))"/>

<!-- BEGIN HIDDEN TEXT -->

<div hidden="true">

<span>

// you can enter your own code here!

</span>

<span>

x /= w; y /= h;

size = 10;

n = PerlinNoise.noise(size*x,size*y,.8);

r = g = b = 255 * n;

</span>

<span>

x/= 30; y/=3 * (y+x)/w;

n = PerlinNoise.noise(x,y,.18);

b = Math.round(255*n);

g = b - 255; r = 0;

</span>

<span>

centerx = w/2; centery = h/2;

dx = x - centerx; dy = y - centery;

dist = (dx*dx + dy*dy)/6000;

n = PerlinNoise.noise(x/5,y/5,.18);

r = 255 - dist*Math.round(255*n);

g = r - 255; b = 0;

</span>

<span>

x/=w;y/=h;sizex=3;sizey=66;

n=PerlinNoise.noise(sizex*x,sizey*y,.1);

x=(1+Math.sin(3.14*x))/2;

y=(1+Math.sin(n*8*y))/2;

b=n*y*x*255; r = y*b;

g=y*255;

</span>

<span>

centerx = w/2; centery = h/2;

dx = x - centerx; dy = y - centery;

dist = 1.2*Math.sqrt(dx*dx + dy*dy);

n = PerlinNoise.noise(x/30,y/110,.28);

dterm = (dist/88)*Math.round(255*n);

r = dist < 150 ? dterm : 255;

b = dist < 150 ? 255-r : 255;

g = dist < 151 ? dterm/1.5 : 255;

</span>

<span>

n = PerlinNoise.noise(x/45,y/120, .74);

n = Math.cos( n * 85);

r = Math.round(n * 255);

b = 255 - r;

g = r - 255 ;

</span>

<span>

x /= w; y /= h; sizex = 1.5; sizey=10;

n=PerlinNoise.noise(sizex*x,sizey*y,.4);

x = (1+Math.cos(n+2*Math.PI*x-.5));

x = Math.sqrt(x); y *= y;

r= 255-x*255; g=255-n*x*255; b=y*255;

</span>

<span>

// This uses no Perlin noise.

x/=w; y/=h;

b = 255 - y*255*(1 + Math.sin(6.3*x))/2;

g = 255 - y*255*(1 + Math.cos(6.3*x))/2;

r = 255 - y*255*(1 - Math.sin(6.3*x))/2;

</span>

<span>

x/=w;y/=h;

size = 20;

n = PerlinNoise.noise(size*x,size*y,.9);

b = 255 - 255*(1+Math.sin(n+6.3*x))/2;

g = 255 - 255*(1+Math.cos(n+6.3*x))/2;

r = 255 - 255*(1-Math.sin(n+6.3*x))/2;

</span>

<span>

x /= w; y /= h; size = 19;

n = PerlinNoise.noise(size*x,size*y,.9);

x = (1+Math.cos(n+2*Math.PI*x-.5));

y = (1+Math.cos(2*Math.PI*y));

//x = Math.sqrt(x); y = Math.sqrt(y);

r= 255-y*x*n*255; g = r;b=255-r;

</span>

</div>

<!-- END HIDDEN TEXT -->

</body>

</html>

The Perlin noise() function may look intimidating, but it's not, really. It's a port of Ken Perlin's Java-based reference implementation of noise(). See yesterday's post for more information.
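Two of its helpers are worth trying on their own. fade() is an ease curve that maps 0..1 onto 0..1 with flat slopes at both ends, and lerp() blends between two values. Both are copied from the listing above; the sample inputs are just illustrative.

```javascript
function fade(t) { return t * t * t * (t * (t * 6 - 15) + 10); }
function lerp(t, a, b) { return a + t * (b - a); }

var start = fade(0);             // 0
var middle = fade(0.5);          // 0.5
var end = fade(1);               // 1
var quarter = lerp(0.25, 0, 8);  // 2: a quarter of the way from 0 to 8
```

Inside noise(), the faded x, y, and z fractions are used as the blend weights for a nest of lerp() calls over the eight corners of the unit cube.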

In the meantime, I encourage you to use this demo to explore the possibilities of procedural texture creation in HTML5 canvas. I hope you agree that it's a lot of fun, and educational as well.